Audio2Face to UE Live Link Plugin#

Introduced in 2023.1.1

The Audio2Face Live Link Plugin allows creators to stream animated facial blendshape weights and audio into Unreal Engine to be played on a character. Either Audio2Face or Avatar Cloud Engine (ACE) can stream facial animation and audio. With very little configuration, a MetaHuman character can be set up to receive streamed facial animation, and the plugin can be used with many other character types given the correct mapping pose assets.

Live Link Setup#

This section assumes that a MetaHuman project is the starting point. This ensures that a valid character and its Blueprints exist and that the Live Link plugin is enabled.

Install the Plugin#

In your Audio2Face installation folder (found within your Omniverse Library), navigate to the following path, which ends at the “ACE” directory:

Library\audio2face-2023.2\ue-plugins\audio2face-ue-plugins\ACEUnrealPlugin-5.3\ACE

Copy the “ACE” directory into the Plugins directory of your UE project.
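The copy step can also be scripted. The following is a minimal Python sketch and is not part of the plugin: the function name `install_ace_plugin` is hypothetical, and both arguments are placeholders for your own “ACE” directory and UE project folder.

```python
import shutil
from pathlib import Path


def install_ace_plugin(ace_dir: Path, project_dir: Path) -> Path:
    """Copy the ACE plugin directory into <project>/Plugins/ACE.

    Both arguments are placeholders for this sketch: `ace_dir` is the "ACE"
    directory inside the Audio2Face installation, and `project_dir` is the
    folder that contains the .uproject file.
    """
    if not ace_dir.is_dir():
        raise FileNotFoundError(f"ACE plugin directory not found: {ace_dir}")

    plugins_dir = project_dir / "Plugins"
    # A UE project may not have a Plugins folder yet, so create it if needed.
    plugins_dir.mkdir(exist_ok=True)

    target = plugins_dir / ace_dir.name
    # dirs_exist_ok=True (Python 3.8+) lets a re-run overwrite an older copy.
    shutil.copytree(ace_dir, target, dirs_exist_ok=True)
    return target
```

Call the function with the full path to the “ACE” directory and the path to the project folder. It returns the location of the copied plugin inside the project's Plugins directory.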

Enable the Plugin#

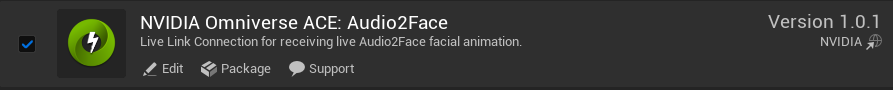

The Omniverse Audio2Face Live Link plugin must be enabled. Do this from the Plugins tab in Unreal Engine:

(Optional) Update the ARKit Mapping Pose and Animation Sequence#

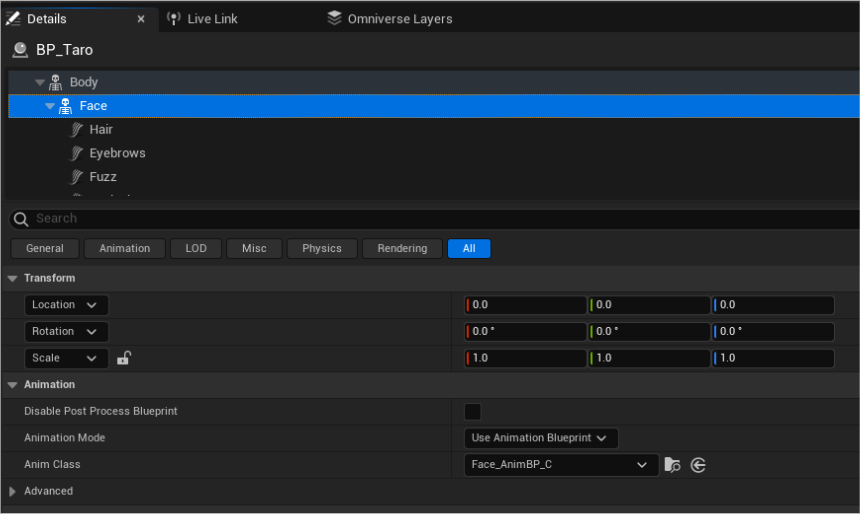

The Audio2Face team has modified the existing Pose and Animation Sequence assets from the MetaHuman project, and the modified versions are included in the Audio2Face Live Link plugin’s Content folder. To switch to them, open the Face_AnimBP Blueprint: select the MetaHuman Blueprint, then select Face in the Details tab. Click the magnifying glass next to the Face_AnimBP_C Anim Class to select that Blueprint in the Content Browser, then double-click it to open it.

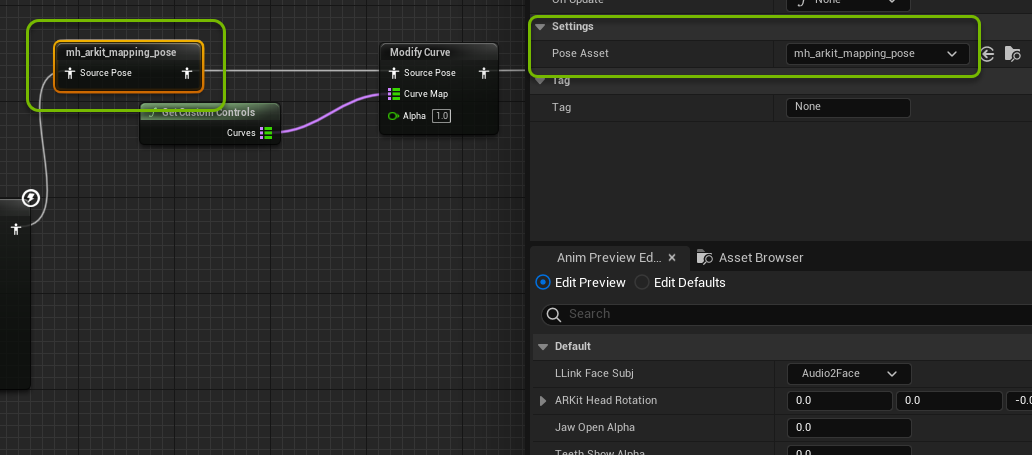

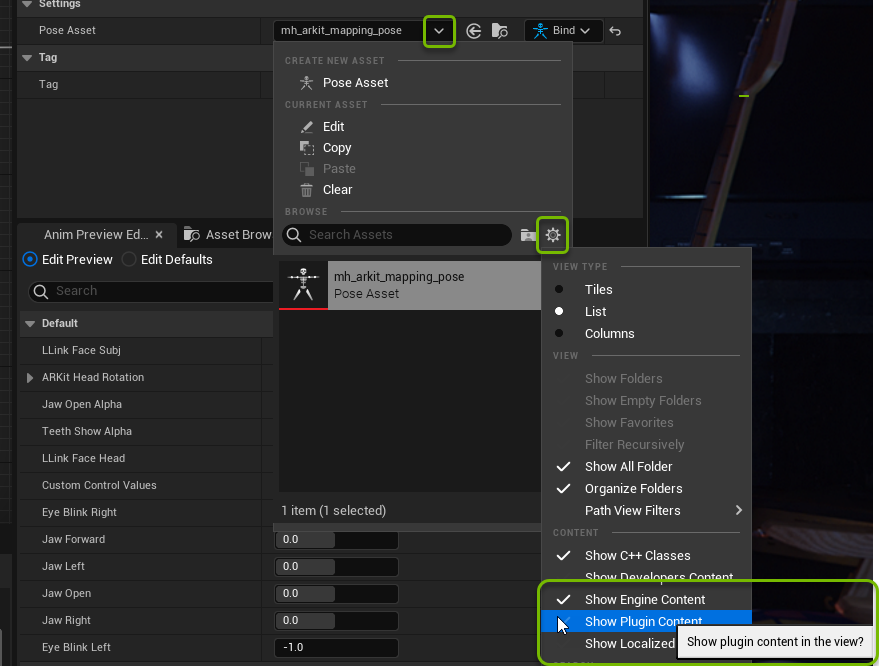

Find the mh_arkit_mapping_pose node and change the Pose Asset to mh_arkit_mapping_pose_A2F:

If this asset is not visible, change the Content visibility to Show Plugin Content:

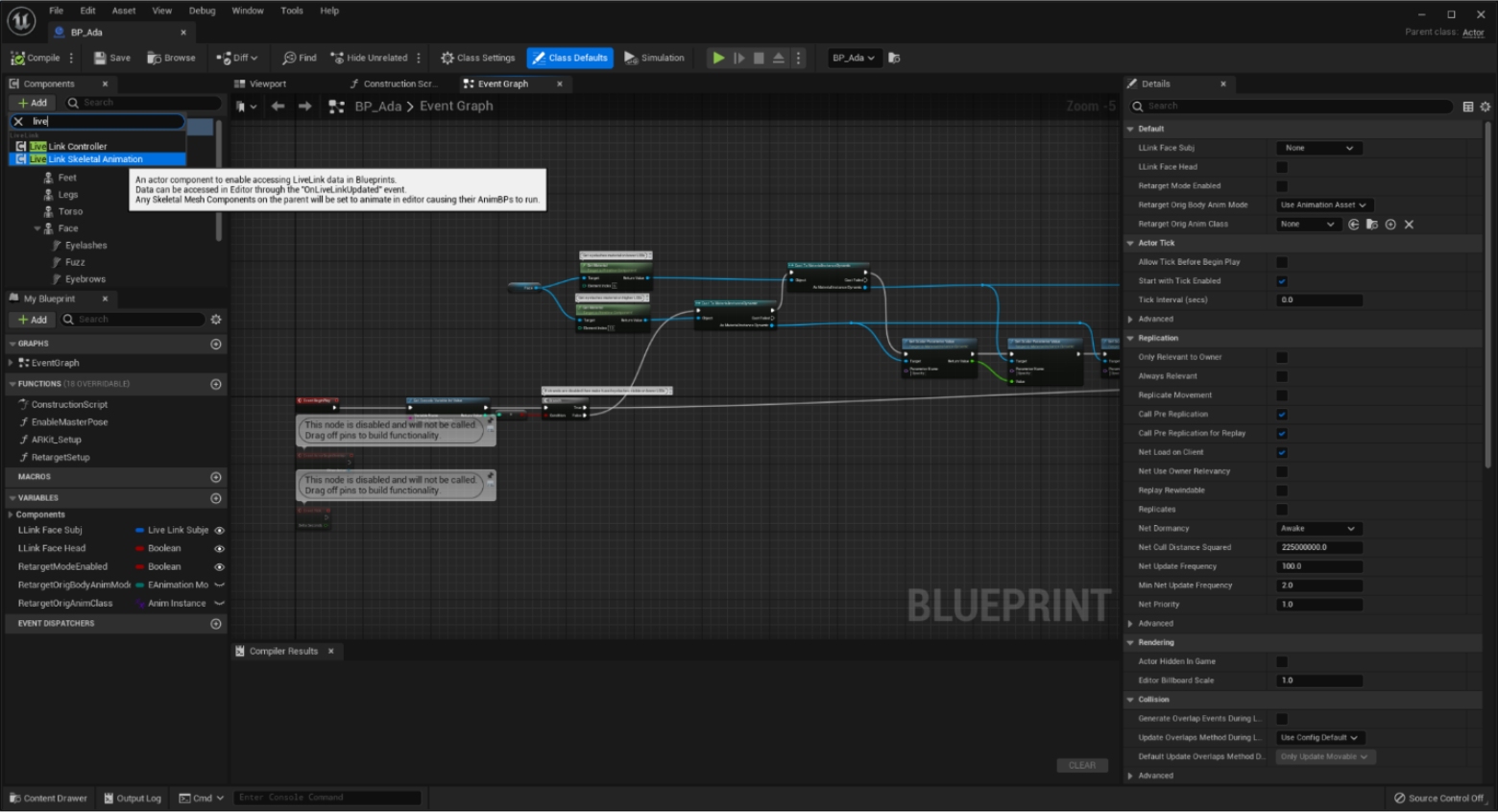

Add Live Link Skeletal Animation Component#

First, open or create a level with a MetaHuman Blueprint. These changes can be made either in the level or in the MetaHuman Blueprint.

Next, add a Live Link Skeletal Animation Component to the MetaHuman Blueprint. Adding it within the Blueprint Editor means the MetaHuman will always carry this component in the future. Click Compile to compile the Blueprint, click Save to save it, and then close the Blueprint Editor.

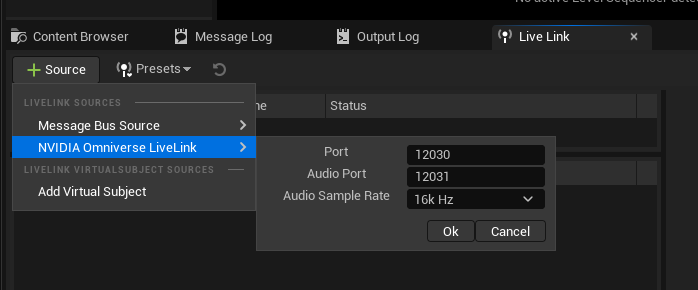

Create an NVIDIA Omniverse Live Link Source#

Open the Live Link window (from the Window > Virtual Production > Live Link menu) and add the NVIDIA Omniverse LiveLink Source:

| Option | Result |
|---|---|
| Port | The listening port that receives streaming animation blendshape weights. |
| Audio Port | The listening port that receives streaming wave audio. |
| Audio Sample Rate | The sample rate of the incoming audio stream. ACE is currently configured to send a 16 kHz wave to Unreal, so that is the default. The plugin doesn’t do any audio resampling (from one sample rate to another), so the configured sample rate must match the wave files that are streamed to the plugin. Resampling support was removed because resampled audio playback tends to drift. |

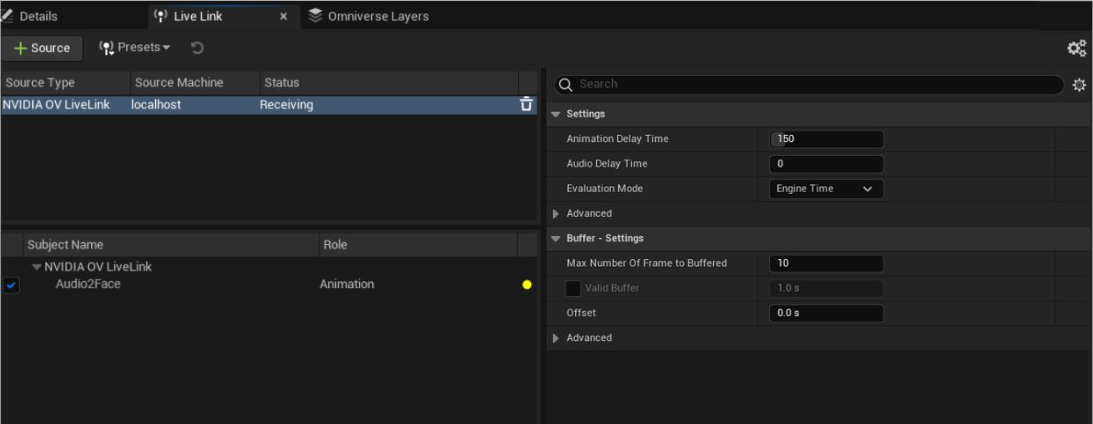

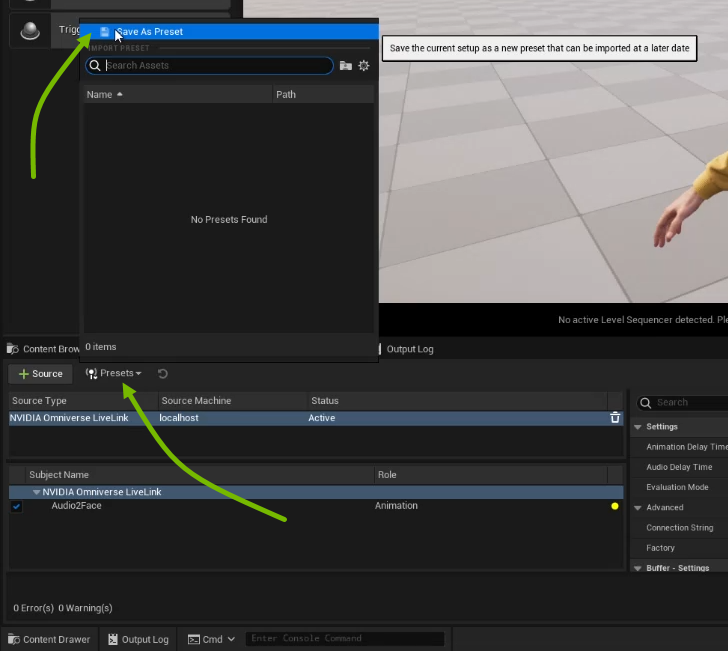
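Because the plugin does not resample, it can help to check a wave file's sample rate before streaming it. The following is a minimal sketch using Python's standard `wave` module, which reads PCM WAV files only. The function name and the `EXPECTED_SAMPLE_RATE_HZ` constant are illustrative assumptions and should be set to whatever Audio Sample Rate is configured on the source.

```python
import wave

# Assumption for this sketch: the Live Link source uses the 16 kHz default.
EXPECTED_SAMPLE_RATE_HZ = 16_000


def sample_rate_matches(wav_path: str, expected_hz: int = EXPECTED_SAMPLE_RATE_HZ) -> bool:
    """Return True if the WAV file's sample rate equals the configured rate."""
    with wave.open(wav_path, "rb") as wav_file:
        return wav_file.getframerate() == expected_hz
```

A file that fails this check would need to be converted to the configured rate with an external audio tool before it is streamed, or the Audio Sample Rate option would need to be changed to match the file.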

Once the source is receiving streamed data correctly, an Audio2Face subject should appear, with the settings described below. Audio2Face is the default subject name; it is configurable in both the Audio2Face application and ACE:

| Option | Result |
|---|---|
| Animation Delay Time | Delays the animation blendshape weight replay by this many milliseconds to help align audio and animation. |
| Audio Delay Time | Delays the audio replay by this many milliseconds to help align audio and animation. |

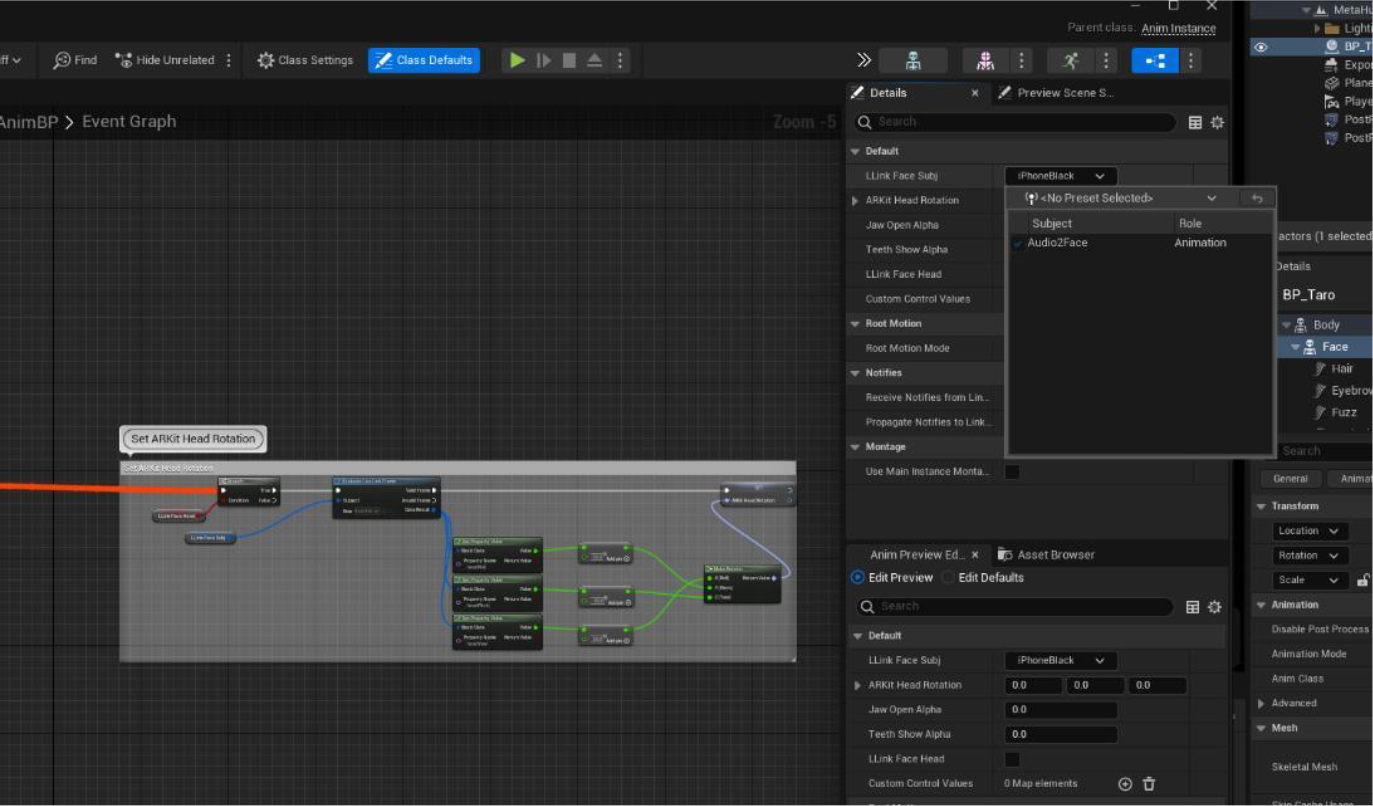
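Since each delay setting only holds one stream back, aligning the two streams amounts to delaying whichever one arrives early. The sketch below illustrates that reasoning. It assumes you have measured the offset yourself (for example by eye or from a recording); `audio_lead_ms` and the function name are hypothetical, not plugin parameters.

```python
from typing import Tuple


def delays_for_offset(audio_lead_ms: float) -> Tuple[float, float]:
    """Return (animation_delay_ms, audio_delay_ms) that cancel a measured offset.

    `audio_lead_ms` is a hypothetical measurement: positive when the audio is
    heard before the matching mouth shape, negative when the animation is early.
    """
    if audio_lead_ms >= 0:
        # Audio arrives early, so hold the audio back.
        return 0.0, float(audio_lead_ms)
    # Animation arrives early, so hold the animation back.
    return float(-audio_lead_ms), 0.0
```

For instance, if the mouth moves about 25 ms before the sound, the call `delays_for_offset(-25)` suggests an animation delay of 25 ms and no audio delay.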

Update Face_AnimBP Blueprint with the Audio2Face Subject Name#

Now that the subject is streaming, the Face_AnimBP Blueprint must be updated with the new Audio2Face subject name. Open the Face_AnimBP Blueprint and change the default value of LLink Face Subj from iPhoneBlack to Audio2Face. Also enable LLink Face Head. The MetaHuman in the Anim Blueprint preview window should update. Click Compile to compile the Blueprint, click Save to save it, and then close the Blueprint Editor.

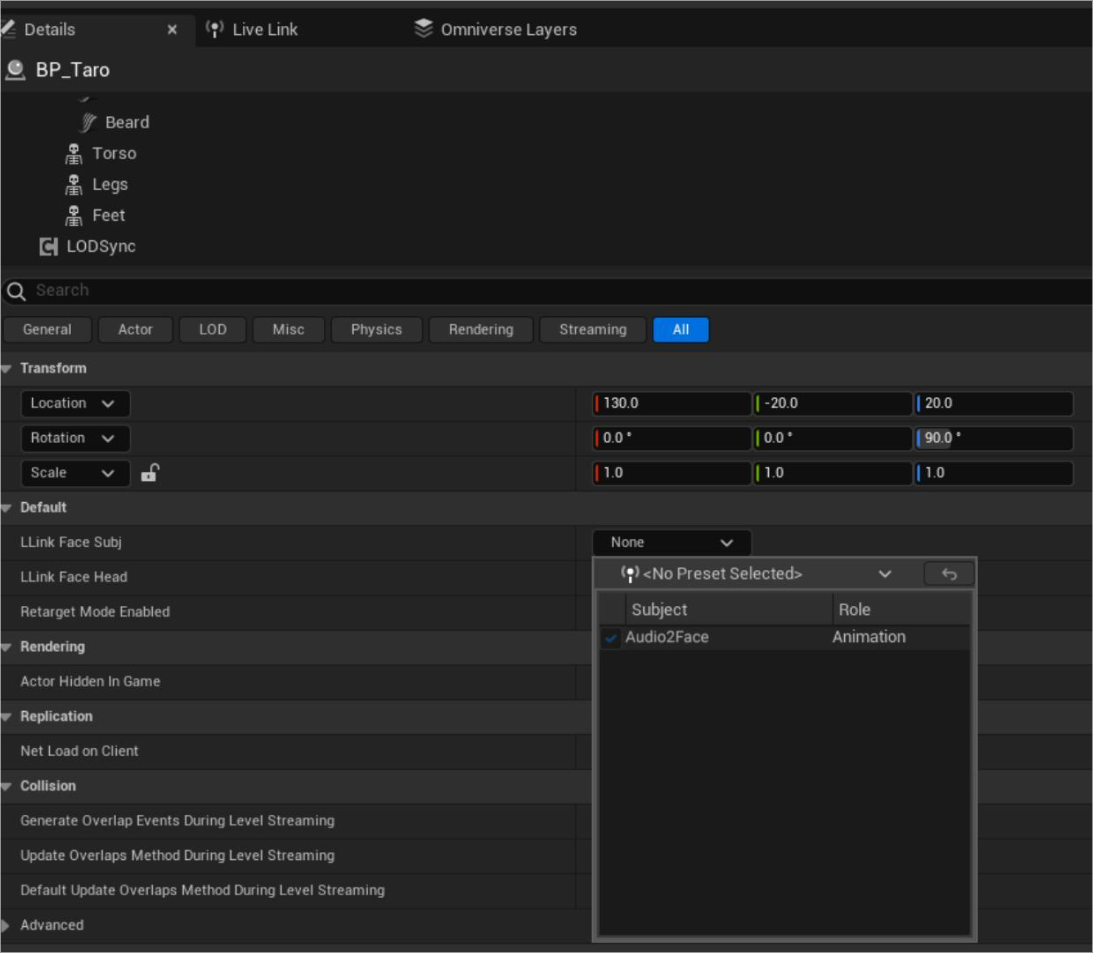

Enable the MetaHuman Blueprint Actor’s LLink Settings#

For Play-In-Editor (PIE) mode to work, the same settings should also be set in the Details tab of the MetaHuman Blueprint actor. Select the MetaHuman Blueprint in the World Outliner and go to the Details tab. Set LLink Face Subj to Audio2Face and enable LLink Face Head.

Live Link Setup for Runtime Deployment#

If the goal is to use the Audio2Face Live Link plugin in a packaged experience, or in -game mode using the editor executable, additional steps are required because the Live Link Source window isn’t available in these configurations.

Create a Live Link Source Preset#

To access the NVIDIA Omniverse Live Link Source during a runtime experience, a LiveLinkPreset asset must be saved. To do this, create the source and save it as a preset:

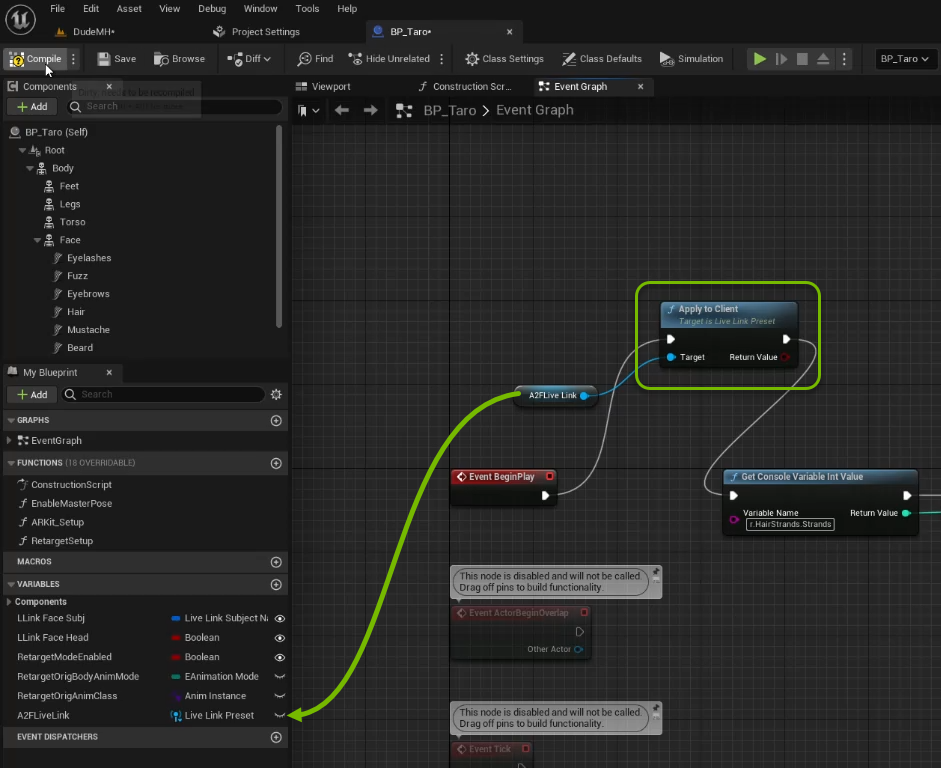

Apply Live Link Preset to MetaHuman Blueprint#

The Live Link Preset can then be applied to a client in the BeginPlay event of the MetaHuman Blueprint. Create a Live Link Preset variable in the Blueprint and pass it to the Apply to Client node.

At this point, whenever the game executable is launched, it will be listening on the audio and animation sockets for streaming data.
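One way to confirm that the launched executable is actually listening is to probe the two ports from another process. The sketch below assumes the plugin's listeners accept plain TCP connections, which this page does not state. The function name is hypothetical, and the ports to probe are whatever Port and Audio Port values were saved in your preset.

```python
import socket


def is_listening(host: str, port: int, timeout_s: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout.

    Assumption: the listener accepts TCP connections. The probe connects and
    immediately disconnects without sending any data.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

Calling `is_listening("127.0.0.1", port)` once for the animation port and once for the audio port gives a quick check before starting a stream. Note that the listener may see the probe as a short-lived client connection.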

Technical Information#

Audio SubmixListener#

When audio is streamed to the A2F Live Link plugin, it is replayed using the SubmixListener within Unreal Engine. This was the most direct way to present audio to the engine, but because it doesn’t use the ISoundGenerator interface, the audio isn’t spatialized or occluded.

Facial Animation and Audio Streaming Protocol#

A detailed description of the streaming and burst mode protocols is in OmniverseLiveLink/README.md. Note that audio and animation packet synchronization only happens when using the burst mode protocol.